Blog

Comment: Gender diversity in AIM boardrooms: another year of standing still

Valentina Dotto, Policy Adviser at CGIUKI, reflects on why the latest Gender Diversity in AIM Company Boards report exposes a market where progress has stalled, why board diversity matters for effective governance and competitiveness, and why deliberate action is now essential if AIM companies are to close the widening gap with the FTSE 350.

Blog

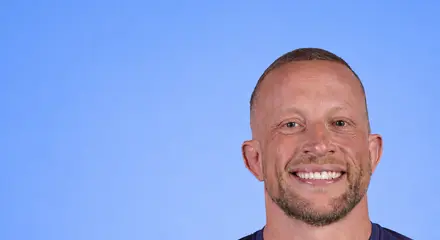

Comment: I’m a professional rugby player, this is why governance matters for players and the game

Professional rugby player and Chair of the RPA Men’s Rugby Board, Max Lahiff reflects on why governance matters to players, how it shapes decisions on welfare and sustainability, and why strengthening governance capability is essential to the future of the game.

Blog

Comment: Governance is rising in East Africa, and the opportunities have never been greater

Barbara Daisy Nabuweke is the Company Secretary at the East African Crude Oil Pipeline (EACOP) Limited. In this comment article, she shares her career pivot from law into governance and explains why now is the perfect time to pursue a career in the profession.

Blog

Comment: Results day was a defining moment in my career

Tom Llewelyn is Company Secretary and Head of Governance at The Cambridge Building Society. He recently completed The Chartered Governance Institute’s Qualifying Programme and in this comment piece, he shares his journey into the world of governance and why completing the QP was transformative for his career.

Blog

Formula One governance agreement 2026

Formula One’s 2026 Concorde Agreement has quietly reshaped the sport’s power structures by separating commercial terms from governance for the first time. The move reflects growing pressure on the FIA and F1 to demonstrate transparency, accountability and faster decision‑making.

Blog

Moving the goalposts? Governance lessons from football’s high-profile managerial exits

High-profile exits at Manchester United and Chelsea have thrown football’s governance into sharp relief. Under intense financial and reputational pressure, unclear roles, blurred oversight and weak challenge mechanisms can destabilise clubs. A new regulator raises baseline standards, but only disciplined governance behaviour and culture can keep organisations resilient.

Blog

Can governance reform restore confidence in UK universities?

UK universities face intense scrutiny and financial strain. This blog examines how governance reform, anchored in the forthcoming revision of the CUC Higher Education Code of Governance, can strengthen assurance, clarify roles and rebuild confidence across a diverse sector.